Not Just A-levels: Unfair Algorithms Are Being Used To Make All Sorts Of Government Decisions

5th September 2020

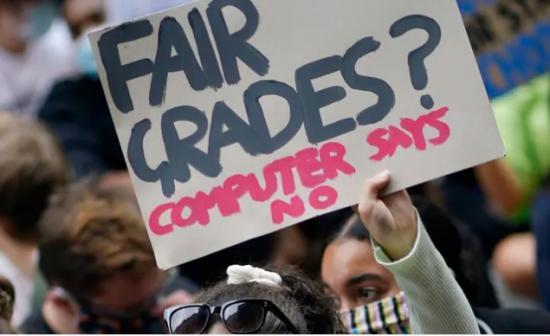

The recent use of an algorithm to calculate the final grades of secondary school students in England provoked so much public anger at its perceived unfairness that the episode has become widely known as the "A-levels fiasco". As a result of the outrage, and the looming threat of legal action, the government was forced into an embarrassing U-turn and awarded grades based on teacher assessment.

Prime Minister Boris Johnson has since blamed the crisis on what he called the "mutant" algorithm. But this wasn't a malfunctioning piece of technology. In marking down many individual students to prevent high grades increasing overall, the algorithm did exactly what the government wanted it to do. The fact that more disadvantaged pupils were marked down was an inevitable consequence of prioritising historical data from an unequal education system over individual achievement.

But more than this, the saga shouldn't be understood as a design failure of one specific algorithm, nor as the result of incompetence on the part of one specific government department. Rather, it is a significant indicator of the data-driven methods that many governments are now turning to, and of the political struggles that will probably be fought over them.

Algorithmic systems tend to be promoted for several reasons, including claims that they produce smarter, faster, more consistent and more objective decisions, and make more efficient use of government resources. The A-levels fiasco has shown that this is not necessarily the case in practice. Even where an algorithm provides a benefit (fast, complex decision-making on large amounts of data), it may bring new problems (socio-economic discrimination).

Algorithms all over

In the UK alone, several systems are being or have recently been used to make important decisions that determine the choices, opportunities and legal position of certain sections of the public.

At the start of August, the Home Office agreed to scrap its visa "streaming tool", which was designed to sort visa applications into risk categories (red, amber, green) indicating how much further scrutiny was needed. This followed a legal challenge from the campaign group Foxglove and the Joint Council for the Welfare of Immigrants charity, which claimed that the algorithm discriminated on the basis of nationality. Before the case could reach court, Home Secretary Priti Patel pledged to halt the use of the algorithm and to commit to a substantive redesign.

The Metropolitan Police Service's "gangs matrix" is a database used to record suspected gang members and undertake automated risk assessments. It informs police interventions including stop and search, and arrest. A number of concerns have been raised regarding its potentially discriminatory impact, its inclusion of potential victims of gang violence, and its failure to comply with data protection law.

Many councils in England use algorithms to check benefit entitlements and detect welfare fraud. Dr Joanna Redden of Cardiff University's Data Justice Lab has found that a number of authorities have halted such algorithm use after encountering problems with errors and bias. Significantly, she also told the Guardian there had been "a failure to consult with the public and particularly with those who will be most affected by the use of these automated and predictive systems before implementing them".

This follows an important warning from Philip Alston, the UN special rapporteur on extreme poverty and human rights, that the UK risks "stumbling zombie-like into a digital welfare dystopia". He argued that too often technology is being used to reduce people's benefits, set up intrusive surveillance and generate profits for private companies.

The UK government has also proposed a new algorithm for assessing how many new houses English local authority areas should plan to build. The effect of this system remains to be seen, though the model appears to suggest that more houses should be built in southern rural areas rather than in urban areas, particularly northern cities, where more building might have been expected. This raises serious questions of fair resource distribution.

Why does this matter?

The use of algorithmic systems by public authorities to make decisions that have a significant impact on our lives points to a number of crucial trends in government. As well as increasing the speed and scale at which decisions can be made, algorithmic systems also change the way those decisions are made and the forms of public scrutiny that are possible.

This signals a shift in the government's perspective on, and expectations for, accountability. Algorithmic systems are opaque and complex "black boxes" that enable powerful political decisions to be made on the basis of mathematical calculations, in ways not always clearly tied to legal requirements.

This summer alone, there have been at least three high-profile legal challenges to the use of algorithmic systems by public authorities, relating to the A-level and visa streaming systems, as well as the government's COVID-19 test and trace tool. Similarly, South Wales Police's use of facial recognition software was declared unlawful by the Court of Appeal.

While the purpose and nature of each of these systems differ, they share common features: each system has been implemented without adequate oversight or clarity regarding its lawfulness.

At worst, the failure of public authorities to ensure that algorithmic systems are accountable is a deliberate attempt to hinder democratic processes by shielding those systems from public scrutiny. At best, it represents a highly negligent attitude towards the government's responsibility to adhere to the rule of law, to provide transparency, and to ensure fairness and the protection of human rights.

With this in mind, it is important that we demand accountability from the government as it increases its use of algorithms, so that we retain democratic control over the direction of our society, and ourselves.

-------------------------------------------------------------------------------

This article was published on The Conversation website on 3rd September 2020. The original version includes many links to the research and data it draws on.

--------------------------------------------------------------------------------

More

The article above is written from an English perspective, but don't assume Scotland is free of the problems that can be built into algorithmic systems. Here are a few links:

Decision-making in the Age of the Algorithm

Algorithms are at the heart of AI and can be useful tools for automating decisions; however, many algorithms exhibit bias and discrimination against women and ethnic minorities.

Close the Gap briefing for Scottish Government debate Artificial Intelligence and Data Driven Technologies: Opportunities for the Scottish Economy and Society

10 principles for public sector use of algorithmic decision-making