Ofcom: Tech firms must up their game to tackle online harms against women and girls

25th November 2025

New industry guidance from Ofcom demands practical action against online misogynistic abuse, pile-ons, stalking and intimate image abuse.

Ofcom sets out five-point plan to hold sites and apps to account on protecting women and girls online.

Sport England and WSL Football welcome guidance, calling for better protection for sportswomen on social media.

Online safety watchdog, Ofcom, today launches new industry guidance demanding that tech firms step up to deliver a safer online experience for millions of women and girls in the UK.

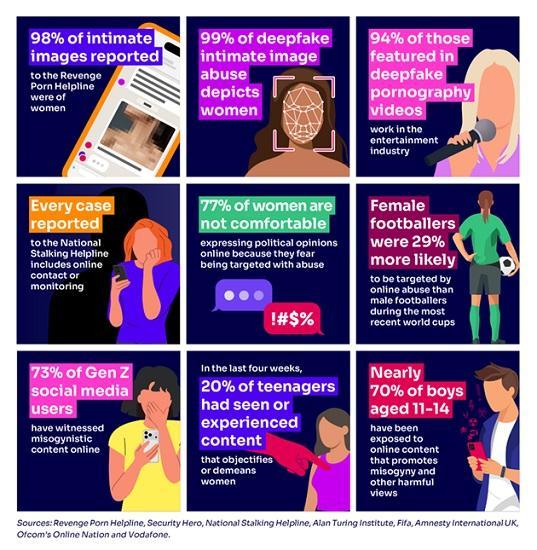

Women and girls face distinct and serious risks online, including misogynistic abuse, sexual violence, coordinated pile-ons, stalking, coercive control and intimate image abuse.

Ofcom's guidance, published today, includes a wide range of practical safety measures that the regulator is urging tech firms to adopt to tackle these harms. These go above and beyond what is needed to comply with their legal duties under the Online Safety Act, setting a new and ambitious standard for women's and girls' online safety.

The guidance was developed with insights from victims, survivors, safety experts, women's advocacy groups and organisations working with men and boys. Its launch is also supported by Sport England as part of their wider This Girl Can campaign, and WSL Football to raise awareness of women’s safety when taking part in sport and exercise.

Ofcom has today written to sites and apps setting an expectation that they start to take immediate action in line with the guidance. We will also publish a future report to reveal how individual companies respond.

Being a woman online

Women from all walks of life and backgrounds face disproportionate levels of harm online, which have wide-reaching effects. They limit women’s and girls’ ability to safely take part in online spaces, freely express themselves online, or even to do their jobs. They also contribute to the spread and normalisation of harmful attitudes towards women and girls.

New industry guidelines to address gender-based harms

Ofcom’s practical guidance, supported by case-study examples, sets out where tech companies can and should do more, while taking account of important human rights including freedom of expression and privacy. Focusing on the following four main areas of harm, our guidance makes clear how we expect services to design and test their services with safety in mind, and improve their reporting tools and support systems to better protect women and girls:

Misogynistic abuse and sexual violence. This includes content that spreads hate or violence against women, or normalises sexual violence, including some types of pornography. It can be illegal or harmful to children and is often pushed by algorithms towards young men and boys. Under our guidance, tech firms should consider:

introducing ‘prompts’ asking users to reconsider before posting harmful content;

imposing ‘timeouts’ for users who repeatedly attempt to abuse a platform or functionality to target victims;

promoting diverse content and perspectives through their recommender ‘for you’ systems to help prevent toxic echo chambers; and

de-monetising posts or videos which promote misogynistic abuse and sexual violence.

Pile-ons and coordinated harassment. This happens when groups gang up to target a specific woman or group of women with abuse, threats, or hate. Such content may be illegal or harmful to children and often affects women in public life. Under our guidance, tech firms should consider:

setting volume limits on posts ("rate limiting") to help prevent mass-posting of abuse in pile-ons;

allowing users to quickly block or mute multiple accounts at once; and

introducing more sophisticated tools for users to make multiple reports and track their progress.

Stalking and coercive control. This covers criminal offences where a perpetrator uses technology to stalk an individual or control a partner or family member. Under our guidance, tech firms should consider:

‘bundling’ safety features to make it easier to set accounts to private;

introducing enhanced visibility restrictions to control who can see past and present content;

ensuring stronger account security; and

removing geolocation by default.

Image-based sexual abuse. This refers to criminal offences involving the non-consensual sharing of intimate images and cyberflashing. Under our guidance, tech firms should consider:

using automated technology known as ‘hash-matching’ to detect and remove non-consensual intimate images;

blurring nudity, giving adults the option to override; and

signposting users to supportive information including how to report a potential crime.

More broadly, we expect tech firms to subject new services or features to ‘abusability’ testing before they roll them out, to identify from the outset how they might be misused by perpetrators. Moderation teams should also receive specialised training on online gender-based harms.

Companies are expected to consult with experts to design policies and safety features that work effectively for women and girls, while continually listening to and learning from survivors’ and victims’ real-life experiences, for example through user surveys.

What happens now?

Ofcom is setting out a five-point action plan to drive change and hold tech firms to account in creating a safer life online for women and girls. We will:

Enforce services’ legal requirements under the Online Safety Act

We’ll continue to use the full extent of our powers to ensure platforms meet their duties in tackling illegal content, such as intimate image abuse or material which encourages unlawful hate and violence.

Strengthen our industry Codes

As changes to the law are made, we will further strengthen the measures in our illegal harms industry Codes. We’re already consulting on measures requiring the use of hash-matching technology to detect intimate image abuse, and our Codes will also be updated to reflect cyberflashing becoming a priority offence next year.

Drive change through close supervision

We have today written an open letter to tech firms as the first step in a period of engagement to ensure they take practical action in response to our guidance. We plan to meet with companies in the coming months to underline our expectations and will convene an industry roundtable in 2026.

Publicly report on industry progress to reduce gender-based harms

We’ll report in summer 2027 on progress made by individual providers, and the industry as a whole, in reducing online harms to women and girls. If their action falls short, we will consider making formal recommendations to Government on where the Online Safety Act may need to be strengthened.

Champion lived experience

The voices of victims, survivors and the expert organisations which support them will remain at the heart of our work in this area. We will continue to listen to their experiences and needs through our ongoing research and engagement programme.

Dame Melanie Dawes, Ofcom’s Chief Executive, said: "When I listen to women and girls who’ve experienced online abuse, their stories are deeply shocking. Survivors describe how a single image shared without their consent shattered their sense of self and safety. Journalists, politicians and athletes face relentless trolling while simply doing their jobs. No woman should have to think twice before expressing herself online, or worry about an abuser tracking her location.

"That’s why today we are sending a clear message to tech firms to step up and act in line with our practical industry guidance, to protect their female users against the very real online risks they face today. With the continued support of campaigners, advocacy groups and expert partners, we will hold companies to account and set a new standard for women’s and girls’ online safety in the UK."

Sports Minister Stephanie Peacock said: “The level of abuse aimed at sportswomen on social media is horrific and evidence shows that women and girls experience unique risks online. Female sports stars have spoken out about the abuse they face while simply trying to perform at the top of their game.

“Our new Women’s Sport Taskforce was set up during the Women’s Rugby World Cup to help tackle exactly these kinds of issues and at its first meeting we heard from world experts about the specific challenges female athletes face. I’d like to join Ofcom and Sport England in urging tech companies to step up and stamp it out.”

Technology Secretary Liz Kendall said: “Tech companies have the ability and the technical tools to block and delete online misogyny. If they fail to act, they’re not just bystanders, they’re complicit in creating spaces where sexism festers and a society where abuse against women and girls becomes normalised.

“I welcome the steps Ofcom has taken to drive this change. Now it’s time for platforms to take responsibility and use every lever to protect women and girls online.”

Chris Boardman, Chair, Sport England said: “Toxic online abuse has terrible offline impacts. As women’s sport grows, so does the abuse of its stars, and that affects women from every walk of life. This Girl Can research shows us that for many women and girls, fear of judgment is a huge deterrent to them exercising - and the horrifying abuse of our athletes makes this worse. The hard-won gains in women’s sport must not be destroyed by misogyny, so we’re supporting Ofcom in order to protect women’s and girls’ participation.”

Nikki Doucet, CEO, WSL Football said: "Abuse directed at female athletes is persistent and horrific. We have engaged in honest, constructive discussions with social media companies to find ways to drive meaningful change, and we welcome Ofcom’s guidance released today, which represents another vital step forward, putting accountability and practical solutions at the forefront. This represents a potentially game-changing moment towards protecting our athletes and women in sport for the future."

Ellie Butt, Head of Policy and Public Affairs at Refuge, said: “At Refuge, we see firsthand how widespread tech-facilitated abuse now is, and the devastating impact it has on survivors. Ofcom’s guidance marks a welcome step towards tackling misogyny and domestic abuse in digital spaces, but meaningful protection for women and girls will rely on tech companies engaging fully with the guidance and effectively putting it into practice.

“We are proud that Refuge’s Survivor Panel helped shape the guidance, drawing attention to the critical need to stop perpetrators from setting up multiple anonymous accounts and the importance of safety by design.

“It is now vital that tech companies take a proactive approach to preventing abuse and removing harmful content. Refuge hopes to see robust monitoring of compliance, and a commitment from Government that if tech companies do not step up, they will make this crucial guidance legally enforceable so that all women and girls get the protection they deserve.”

Source

https://www.ofcom.org.uk/online-safety/illegal-and-harmful-content/ofcom-tech-firms-must-up-their-game-to-tackle-online-harms-against-women-and-girls